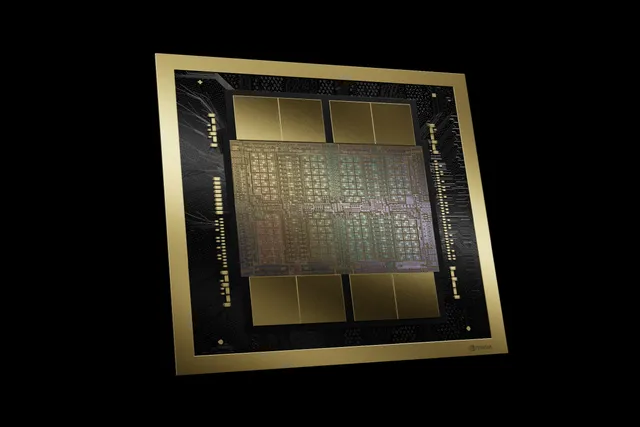

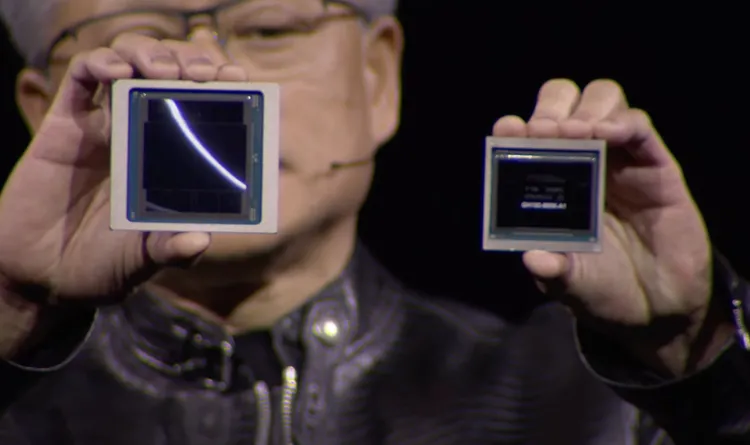

Competitors have been battling to catch up to Nvidia, which became a multitrillion-dollar company thanks to its indispensable H100 AI processor and may now be worth more than Alphabet and Amazon. Yet with the release of the Blackwell B200 GPU and GB200 “superchip,” Nvidia could instead extend its lead.

According to Nvidia, the B200’s 208 billion transistors can deliver up to 20 petaflops of FP4 processing power. It adds that a GB200, which combines two of those GPUs with a single Grace CPU, can provide 30 times the performance for LLM inference workloads while also being far more efficient. Compared to an H100, Nvidia claims that it “reduces cost and energy consumption by up to 25x.”

According to Nvidia, training a 1.8 trillion parameter model would previously have required 8,000 Hopper GPUs and 15 megawatts of power. Nvidia’s CEO says 2,000 Blackwell GPUs can do the same job while drawing just four megawatts.
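The scale of that claim is easier to see as ratios. The following is a minimal sketch that uses only the figures quoted above. The names are illustrative, and dividing total power by GPU count is a simplifying assumption, since the quoted megawatt figures presumably cover more than the GPUs alone.

```python
# Figures as quoted by Nvidia for training a 1.8-trillion-parameter model.
HOPPER_GPUS = 8_000
HOPPER_POWER_MW = 15.0
BLACKWELL_GPUS = 2_000
BLACKWELL_POWER_MW = 4.0


def kilowatts_per_gpu(total_megawatts: float, gpu_count: int) -> float:
    """Average power per GPU in kW, assuming power is split evenly across GPUs."""
    return total_megawatts * 1_000 / gpu_count


# How many times fewer GPUs and how much less power the Blackwell setup needs.
gpu_reduction = HOPPER_GPUS / BLACKWELL_GPUS
power_reduction = HOPPER_POWER_MW / BLACKWELL_POWER_MW

hopper_kw = kilowatts_per_gpu(HOPPER_POWER_MW, HOPPER_GPUS)
blackwell_kw = kilowatts_per_gpu(BLACKWELL_POWER_MW, BLACKWELL_GPUS)

print(f"GPU count reduction: {gpu_reduction:.2f}x")
print(f"Power reduction:     {power_reduction:.2f}x")
print(f"Hopper:    {hopper_kw:.3f} kW per GPU")
print(f"Blackwell: {blackwell_kw:.3f} kW per GPU")
```

Under that even-split assumption, each Blackwell GPU accounts for slightly more power than a Hopper GPU (2 kW versus about 1.9 kW), but only a quarter as many are needed, so total power falls by 3.75x.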

On a GPT-3 LLM benchmark with 175 billion parameters, Nvidia claims that the GB200 delivers a somewhat more modest seven times the performance of an H100, along with four times the training speed.

One of the main enhancements, according to Nvidia, is a second-generation transformer engine that uses four bits instead of eight for each neuron, doubling compute, bandwidth, and model size (hence the 20 petaflops of FP4 mentioned earlier). A second significant difference only appears when you link large numbers of these GPUs: a next-generation NVLink switch with 1.8 terabytes per second of bidirectional bandwidth that lets up to 576 GPUs communicate with one another.
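To illustrate why halving the bits per value matters, here is a rough sketch of the memory needed just to hold a model's weights at each precision. It assumes every parameter is stored at the same width and ignores activations, optimizer state, and any scaling metadata. The function name is hypothetical, and the 1.8 trillion parameter count is borrowed from Nvidia's training example.

```python
def weight_storage_terabytes(num_parameters: int, bits_per_parameter: int) -> float:
    """Storage for the weights alone, in terabytes (1 TB = 1e12 bytes)."""
    total_bytes = num_parameters * bits_per_parameter / 8
    return total_bytes / 1e12


PARAMS = 1_800_000_000_000  # 1.8 trillion parameters

fp8_tb = weight_storage_terabytes(PARAMS, bits_per_parameter=8)
fp4_tb = weight_storage_terabytes(PARAMS, bits_per_parameter=4)

print(f"8-bit weights: {fp8_tb:.2f} TB")
print(f"4-bit weights: {fp4_tb:.2f} TB")
print(f"Ratio: {fp8_tb / fp4_tb:.1f}x")
```

Halving the width halves the bytes that must be stored and moved per parameter, which is the sense in which the same memory and bandwidth can serve a model twice the size.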

That required Nvidia to build a whole new network switch chip, which the company says has 50 billion transistors and 3.6 teraflops of onboard processing power.

Previously, according to Nvidia, a cluster of just 16 GPUs would spend 60% of its time on communication between the GPUs and only 40% on actual computing.

Naturally, Nvidia is counting on businesses to purchase these GPUs in bulk. To that end, the company is packaging these GPUs in larger designs like the GB200 NVL72, which packs 72 GPUs and 36 CPUs into a single liquid-cooled rack for a total of 1,440 petaflops (or 1.4 exaflops) of inference or 720 petaflops of AI training performance. Inside are over two miles of wires, made up of five thousand separate cables.

Each tray in the rack holds either two GB200 chips or two NVLink switches; there are 18 of the former and nine of the latter per rack. Nvidia claims that one of these racks can support a 27-trillion-parameter model. For comparison, GPT-4 is estimated to have around 1.7 trillion parameters.
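The rack-level totals can be cross-checked from the per-tray figures. This is a back-of-the-envelope sketch, assuming 18 compute trays with two GB200 superchips each, two GPUs and one Grace CPU per superchip, and the 20 petaflops of FP4 per GPU quoted earlier. The constant names are illustrative.

```python
# Rack composition as described for the GB200 NVL72.
COMPUTE_TRAYS_PER_RACK = 18
SUPERCHIPS_PER_TRAY = 2
GPUS_PER_SUPERCHIP = 2
CPUS_PER_SUPERCHIP = 1
FP4_PETAFLOPS_PER_GPU = 20  # peak figure quoted by Nvidia

superchips_per_rack = COMPUTE_TRAYS_PER_RACK * SUPERCHIPS_PER_TRAY
gpus_per_rack = superchips_per_rack * GPUS_PER_SUPERCHIP
cpus_per_rack = superchips_per_rack * CPUS_PER_SUPERCHIP
rack_fp4_petaflops = gpus_per_rack * FP4_PETAFLOPS_PER_GPU

print(f"Superchips per rack: {superchips_per_rack}")
print(f"GPUs per rack:       {gpus_per_rack}")
print(f"CPUs per rack:       {cpus_per_rack}")
print(f"Peak FP4 per rack:   {rack_fp4_petaflops} petaflops")
```

The results line up with the 72 GPUs, 36 CPUs, and 1,440 petaflops of inference performance that Nvidia quotes for the NVL72.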

Although it’s unclear how many they are buying, the company says that Amazon, Google, Microsoft, and Oracle already plan to offer the NVL72 racks as part of their cloud services.

Naturally, Nvidia is also happy to sell companies the rest of the solution. The DGX Superpod for DGX GB200 combines eight systems into one for a total of 288 CPUs, 576 GPUs, 240TB of memory, and 11.5 exaflops of FP4 processing power. According to Nvidia, its systems can scale to tens of thousands of GB200 superchips, connected with 800Gbps networking using either its Quantum-X800 InfiniBand (for up to 144 connections) or Spectrum-X800 Ethernet (for up to 64 connections).
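The Superpod totals follow from multiplying out the rack figures. The sketch below assumes each of the eight systems is one NVL72 rack with 72 GPUs and 36 CPUs, and reuses the quoted peak of 20 petaflops of FP4 per GPU. The names are illustrative.

```python
# Assumption: the Superpod's eight systems are eight NVL72 racks.
RACKS_PER_SUPERPOD = 8
GPUS_PER_RACK = 72
CPUS_PER_RACK = 36
FP4_PETAFLOPS_PER_GPU = 20  # peak figure quoted by Nvidia

total_gpus = RACKS_PER_SUPERPOD * GPUS_PER_RACK
total_cpus = RACKS_PER_SUPERPOD * CPUS_PER_RACK
total_fp4_exaflops = total_gpus * FP4_PETAFLOPS_PER_GPU / 1_000

print(f"GPUs: {total_gpus}")
print(f"CPUs: {total_cpus}")
print(f"Peak FP4: {total_fp4_exaflops:.2f} exaflops")
```

Under those assumptions the totals come to 576 GPUs, 288 CPUs, and about 11.5 exaflops, matching the quoted Superpod specification. The GPU count also equals the 576-GPU limit of the NVLink switch.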

Since this news is coming out of Nvidia’s GPU Technology Conference, which is usually focused almost exclusively on GPU computing and AI rather than gaming, we don’t expect to hear anything about new gaming GPUs today. However, the Blackwell GPU architecture will likely also power a future line of desktop graphics cards, the RTX 50-series.